FlexMPI

FlexMPI framework

Providing malleable capabilities to MPI applications

FlexMPI is a runtime system that extends the MPI library with malleability and load-balancing capabilities for MPI applications. FlexMPI also includes an external controller that manages the execution of multiple (malleable) applications. This management covers dynamically changing the number of processes of an application, application monitoring, process-core binding, and I/O scheduling.

The FlexMPI source code is available in the download section. We provide the FlexMPI library and the external controller, as well as a basic example of an MPI application that alternates CPU, communication and I/O phases. The code repository includes a user manual with a full description of the installation and execution processes.


Project goals

- To provide MPI applications with malleable capabilities that allow them to dynamically increase or decrease the number of processes at execution time.

- To transparently balance the application load by redistributing the data when a malleable operation is carried out. Alternatively, load balancing can also be triggered by external user commands.

- To provide monitoring capabilities to the applications. The metrics collected include the execution time of each CPU, communication and I/O phase, the amount of data involved in the I/O operations, and other metrics (MIPS, FLOPS, cache misses, etc.) obtained using performance counters.

- An external controller is included to collect monitoring information from the running applications. The controller can also send control commands to the executing applications. These commands can be provided by the user or by third-party programs and include (among others) actions to spawn or remove processes, to perform process-core binding, and to change the performance counters used to monitor the application.

- FlexMPI includes an implementation of Clarisse’s I/O scheduling algorithm that transparently coordinates the I/O of the executing applications.
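
The load-balancing goal above (redistributing data when the number of processes changes) can be illustrated with a minimal sketch. It assumes a one-dimensional array split into contiguous blocks, which is only one possible data layout; the function names `block_partition` and `redistribution_plan` are hypothetical and are not part of the FlexMPI API.

```python
def block_partition(n_items, n_procs):
    """Split n_items into n_procs contiguous half-open ranges (start, end).

    The first (n_items % n_procs) ranks receive one extra item.
    """
    if n_procs <= 0:
        raise ValueError("n_procs must be positive")
    base, extra = divmod(n_items, n_procs)
    ranges = []
    start = 0
    for rank in range(n_procs):
        size = base + (1 if rank < extra else 0)
        ranges.append((start, start + size))
        start += size
    return ranges


def redistribution_plan(n_items, old_procs, new_procs):
    """List the (src_rank, dst_rank, start, end) transfers needed to move
    from the old block partition to the new one.

    Items that already sit on the right rank are not transferred.
    """
    old = block_partition(n_items, old_procs)
    new = block_partition(n_items, new_procs)
    plan = []
    for dst, (new_start, new_end) in enumerate(new):
        for src, (old_start, old_end) in enumerate(old):
            lo, hi = max(new_start, old_start), min(new_end, old_end)
            if lo < hi and src != dst:
                plan.append((src, dst, lo, hi))
    return plan


if __name__ == "__main__":
    # Growing from 2 to 4 processes over 10 items.
    for transfer in redistribution_plan(10, 2, 4):
        print(transfer)
```

In a real MPI program each transfer in the plan would correspond to a message between two ranks; the sketch only computes which index ranges would have to move.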

Framework description

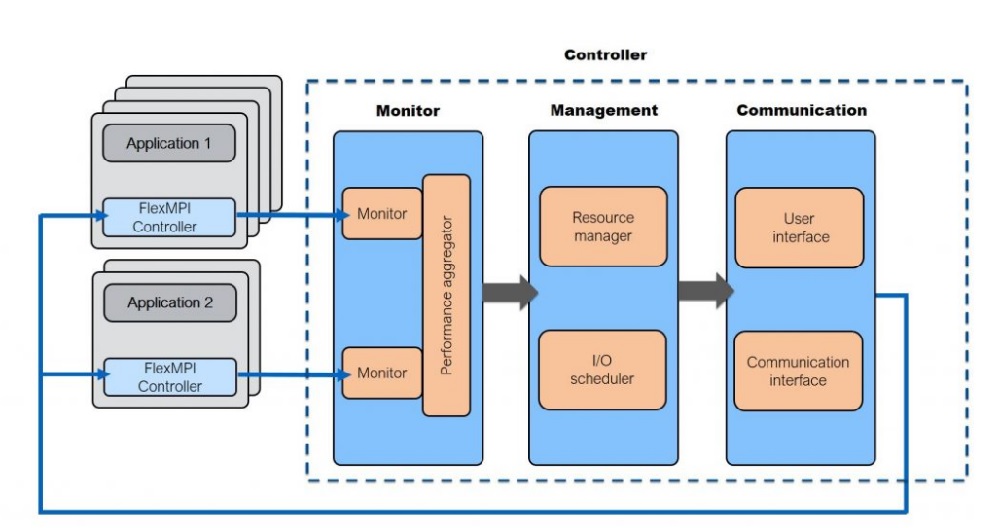

The figure above shows the framework architecture. The FlexMPI library runs together with the applications. It receives commands from the external controller, collects application performance metrics using the PAPI library and internal timers, and sends them to the controller. Note that FlexMPI includes many features not described in this summary. For a detailed description, see the PhD thesis “Optimization Techniques for Adaptability in MPI Applications”.

The controller consists of three components: the monitoring, management and communication modules. The monitoring module receives and stores the application performance metrics, which include CPU, communication and I/O times. These metrics can subsequently be used by different optimization policies.
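
As a rough illustration of how stored metrics might feed an optimization policy, the sketch below keeps per-application phase times and derives the fraction of time spent in I/O. The class names, field names and the `io_fraction` metric are assumptions made for this example; they do not describe the actual data structures of the FlexMPI controller.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class PhaseSample:
    """Times (in seconds) reported for one iteration of an application."""
    cpu_s: float
    comm_s: float
    io_s: float


class MetricsStore:
    """Hypothetical in-memory store, keyed by application identifier."""

    def __init__(self):
        self._samples = defaultdict(list)

    def record(self, app_id, sample):
        """Append one sample to the history of app_id."""
        self._samples[app_id].append(sample)

    def io_fraction(self, app_id):
        """Share of the total recorded time that app_id spent in I/O.

        Returns 0.0 when nothing has been recorded for app_id.
        """
        samples = self._samples.get(app_id, [])
        total = sum(s.cpu_s + s.comm_s + s.io_s for s in samples)
        if total == 0:
            return 0.0
        return sum(s.io_s for s in samples) / total


if __name__ == "__main__":
    store = MetricsStore()
    store.record("app0", PhaseSample(cpu_s=6.0, comm_s=2.0, io_s=2.0))
    store.record("app0", PhaseSample(cpu_s=2.0, comm_s=2.0, io_s=2.0))
    print(store.io_fraction("app0"))
```

A policy could, for instance, use such a derived value to decide which applications are I/O-bound; the choice of metric here is purely illustrative.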

The management module includes a resource manager that assigns the application processes to the existing compute nodes. This is done when a new application is launched or when new processes are created (by means of malleability). The other component of this module is the I/O scheduler, which coordinates the application I/O by giving each application exclusive I/O access.
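
The idea of exclusive I/O access can be sketched as follows. This is not Clarisse’s scheduling algorithm: it is a simplified stand-in that assumes a first-come, first-served policy, and the class and method names are hypothetical.

```python
from collections import deque


class ExclusiveIOScheduler:
    """Grants I/O access to one application at a time (FIFO order assumed)."""

    def __init__(self):
        self._holder = None       # application currently allowed to do I/O
        self._waiting = deque()   # applications queued for access

    def request(self, app_id):
        """Ask for I/O access.

        Returns True if app_id holds the access after the call,
        otherwise queues app_id (once) and returns False.
        """
        if self._holder is None:
            self._holder = app_id
            return True
        if app_id != self._holder and app_id not in self._waiting:
            self._waiting.append(app_id)
        return app_id == self._holder

    def release(self, app_id):
        """Give up I/O access and hand it to the next waiting application.

        Returns the new holder, or None if nobody is waiting.
        """
        if app_id != self._holder:
            raise ValueError(f"{app_id!r} does not hold I/O access")
        self._holder = self._waiting.popleft() if self._waiting else None
        return self._holder


if __name__ == "__main__":
    sched = ExclusiveIOScheduler()
    print(sched.request("A"))   # A gets access immediately
    print(sched.request("B"))   # B has to wait
    print(sched.release("A"))   # access passes to B
```

A production scheduler would also have to notify the waiting application when it becomes the holder; the sketch leaves that to the caller, which can inspect the value returned by `release`.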

Finally, the communication module receives the user commands. A full description of these commands can be found in the FlexMPI User Manual. The communication interface is responsible for sending these control commands to the FlexMPI library (running with the applications).

Project contributors

At Carlos III University of Madrid: David E. Singh, Jesús Carretero, Javier García Blas and Florín Isaila.

At Barcelona Supercomputing Center: María-Cristina Marinescu.

At industry: Gonzalo Martín and Manuel Rodríguez-Gonzalo.

Funding

This work has been partially funded by the Spanish Ministry of Science and Education under the contracts MEC 2011/00003/001, TIN2010-16497, TIN2013-41350-P and TIN2016-79637-P “Towards Unification of HPC and Big Data paradigms”. It was also partially supported by the EU under the COST Program Action IC1305, Network for Sustainable Ultrascale Computing (NESUS).

Contact

David E. Singh

Address

Departamento de Informática

Universidad Carlos III de Madrid.

Avda. de La Universidad 30. 28911 Leganés. Spain.

Emails

dexposit at inf.uc3m.es